
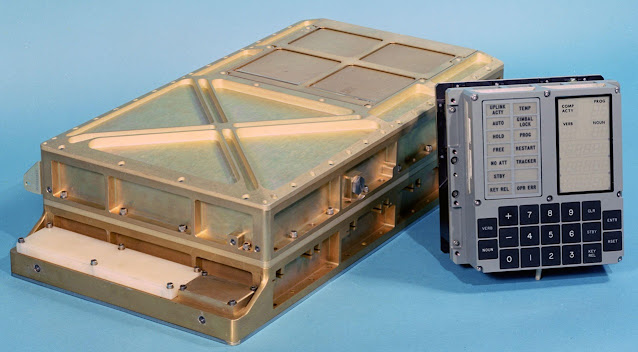

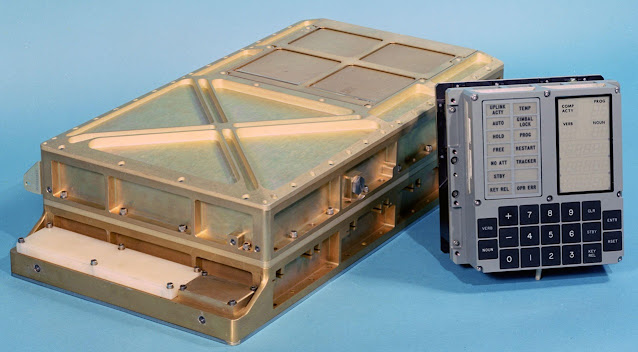

The Apollo Guidance Computer and the DSKY

My Apollo display and keyboard unit, aka DSKY


My space shuttle Atlantis (orbiter vehicle designation: OV‑104) with a swatch of its space-flown cargo bay liner.

A 1982 photo of me, from which the AI generated the video.

A photo from the Feb. 17, 1958, Minneapolis Star newspaper article showing the Duluth SAGE Direction Center (DC-10).

The former Duluth SAGE building as it stands today, repurposed as the Natural Resources Research Institute.

He immigrated to the US and settled in St. Paul, where he worked as a clock and watchmaker at A. L. Haman & Co., located in the Endicott building at 352 Robert St., near the Telegraph Cable Company.

In 1895, Hummel invented the telediagraph, a transmission and reception apparatus similar to a fax machine that could send hand-drawn images over telegraph wires to a receiving station.

In April 1900, Pearson’s Magazine of London, England, described the telediagraph as consisting of two almost identical machines – the “transmitter” and the “receiver.”

Each machine features an eight-inch cylinder turned precisely by clockwork-like mechanisms.

A fine platinum stylus, similar to a telegraph key, rests above each machine's cylinder.

On the transmitter, the stylus traces over tinfoil wrapped around the cylinder; on the receiver, it draws through carbon paper.

The telediagraph let artists and telegraph operators reproduce hand-drawn images over telegraph wires, enabling newspapers to print timely pictures alongside their stories.

On Dec. 6, 1897, Minnesota’s St. Paul Globe newspaper published a front-page article about Hummel’s telediagraph transmissions of hand-drawn images over telegraph wires.

Today, I will paraphrase and comment on parts of the article:

“Tests conducted yesterday [Sunday] over the Northern Pacific railroad system [telegraph line] demonstrated the successful electrical reproduction of hand-drawn pictures at a distance with a new, locally invented device.

The pictures were reproduced using electrical currents over telegraph wires that spanned the greater part of northern Minnesota.

Ernest A. Hummel, a jeweler with Haman & Co. in this city, has worked on a mechanism for electric picture reproduction for two years.

Mr. Hummel’s device is complicated, combining three or four different motive powers.

[In 1897, ‘motive powers’ referred to sources of motion such as springs, gears, batteries, electric generators, compressed air, and fuels like oil.]

Those who witnessed the tests at the Northern Pacific general office yesterday confirmed that he had achieved his goal.

His invention makes picture transmission as feasible as sending written or spoken words.

Both the transmitter and the receiver are built mainly of brass for durability, and each takes up about as much space as a typewriter.

Each includes a small electric motor that drives the carriage and moves the copying pencils back and forth across the area to be copied.

The transmitter’s carriage includes a projecting arm with a sharp platinum point.

This point moves in precise increments over the image, driven by an ingenious automatic clockwork system.

The increments are controlled by a screw and a series of ratchets, springs, and gearwheels that precisely regulate the spacing between drawn lines.

When the machine is connected to the electric circuit and the platinum point is in motion, each encounter with non-conductive material momentarily breaks the circuit.

This break activates a sharp needle on the receiver to etch a corresponding line.

When the platinum point passes over the conductive material, the circuit closes, and the needle lifts.
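To make the make-and-break principle concrete, here is a minimal Python sketch. It is a toy model under stated assumptions, not a reconstruction of Hummel's mechanism: the drawing is treated as a grid in which `True` marks insulating shellac ink and `False` marks bare tinfoil, and the hypothetical functions `transmit` and `receive` stand in for the two machines.

```python
# Toy model of the make-and-break principle (an illustrative assumption,
# not Hummel's actual design). The drawing is a grid of cells: True marks
# insulating shellac ink, False marks bare, conductive tinfoil.

def transmit(drawing):
    """Scan the drawing line by line and return the circuit state at each
    step: True means the circuit is closed, False means it is broken."""
    signal = []
    for row in drawing:                # each row is one pass of the platinum point
        for is_ink in row:             # the point moves across the row step by step
            signal.append(not is_ink)  # ink insulates, so the circuit opens
    return signal


def receive(signal, width):
    """Rebuild the picture: the needle marks ('#') while the circuit is
    broken and lifts ('.') while it is closed."""
    marks = ["." if closed else "#" for closed in signal]
    return ["".join(marks[i:i + width]) for i in range(0, len(marks), width)]


# Hypothetical 5-by-5 sketch of a diagonal stroke (1 = ink, 0 = bare foil).
sketch = [
    [1, 0, 0, 0, 0],
    [0, 1, 0, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 0, 1, 0],
    [0, 0, 0, 0, 1],
]
drawing = [[bool(cell) for cell in row] for row in sketch]

for line in receive(transmit(drawing), width=5):
    print(line)
```

Running it prints the diagonal stroke back out, one scanned line per row, because the receiver marks exactly where the transmitter's circuit was broken.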

This process requires precise adjustment of both the transmitter and the receiver so that the two instruments work in step.

While the electric motor drives the carriage, the clockwork controls its speed through a system of cogs and whirling fans (similar to a steam engine governor, but with disks instead of spheres).
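Why the two machines had to stay in step can be shown with another toy sketch. Assuming the signal arrives as one continuous stream of marks, a receiver whose sweep is even one step longer than the transmitter's lets each printed line drift further from where it belongs, so a straight vertical stroke prints as a slanted one. The `reassemble` helper and the 4-by-4 picture are hypothetical.

```python
# Toy model (an assumption, not Hummel's mechanism): the receiver cuts the
# incoming stream of marks into lines of its own sweep length. If that length
# differs from the transmitter's, every line drifts and the picture shears.

def reassemble(stream, width):
    """Cut a flat sequence of marks into printed lines of `width` steps."""
    return ["".join(stream[i:i + width]) for i in range(0, len(stream), width)]


# Hypothetical 4-by-4 picture with a vertical stroke down the left edge.
picture = ["#...", "#...", "#...", "#..."]
stream = "".join(picture)  # the transmitter sends the lines one after another

in_step = reassemble(stream, 4)      # receiver sweep matches the transmitter
out_of_step = reassemble(stream, 5)  # receiver sweep one step too long

print("\n".join(in_step))
print()
print("\n".join(out_of_step))
```

In the in-step case the output matches the original picture; in the out-of-step case the stroke slants across the page, which illustrates why both instruments needed careful adjustment.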

Preliminary trial runs conducted three weeks ago showcased the system’s potential.

The main problem was speed: the pointer took thirty-eight minutes to cross the image.

Since then, Mr. Hummel has refined his invention, devoting his spare time to adjusting the mechanical parts for increased speed.

Yesterday, at about 11 a.m., Mr. Hummel connected his experimental machine to the regular business wires of the railroad, which are less crowded on Sundays than on other days.

At two minutes past 11 a.m., the transmitter pointer started moving over the traced features of Adolph Luetgert, a notorious criminal from Chicago.

The circuit covered 288 miles, running from St. Paul to Staples and back.

Six relays added 600 ohms of resistance, equivalent to 40 more miles of wire.

Despite an effective distance of 328 miles, the machine worked flawlessly, and within sixteen minutes the image of Luetgert was faithfully reproduced at the receiving station.
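The Globe's numbers hang together, as a quick Python check shows. The article's own figures imply about 15 ohms per mile of wire. The per-relay value assumes all six relays were identical, and the speed-up comparison assumes the earlier 38-minute run and the 16-minute test both measure one complete pass over a picture; the article states neither assumption.

```python
# Back-of-the-envelope check of the figures in the Globe's account.
circuit_miles = 288           # St. Paul to Staples and back
relay_count = 6
relay_resistance_ohms = 600   # combined extra resistance of the six relays
relay_equivalent_miles = 40   # the article's wire-length equivalent

ohms_per_mile = relay_resistance_ohms / relay_equivalent_miles  # implied by the article
ohms_per_relay = relay_resistance_ohms / relay_count            # assumes identical relays
effective_miles = circuit_miles + relay_equivalent_miles

# Assumes the 38-minute and 16-minute figures both describe one full pass.
speedup = 38 / 16

print(f"Implied wire resistance: {ohms_per_mile:.0f} ohms per mile")
print(f"Resistance per relay: {ohms_per_relay:.0f} ohms")
print(f"Effective circuit length: {effective_miles} miles")
print(f"Speed-up since the first trials: about {speedup:.1f}x")
```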

Several Northern Pacific officials, electricians, and others witnessed this successful test.”

On March 19, 1898, the Minneapolis Daily Times newspaper published “News Pictures by Telegraph,” an article about Hummel’s invention.

“What the telegraph and telephone are to the news-gathering end of a great newspaper, the new ‘likeness-sender,’ will be to the great illustration department of a modern journal,” the newspaper said.

On June 1, 1898, the Sacramento Record-Union newspaper reported that officials from the New York Herald newspaper had tested Mr. Hummel’s invention and that it clearly reproduced a 4.5-inch drawing of New York Mayor Van Wyck, made on a tin plate by a sketcher using shellac and alcohol.

The drawing was transmitted six miles over a telegraph wire and accurately reproduced on Hummel’s receiving apparatus in 22 minutes.

Ernest A. Hummel, who passed away Oct. 10, 1944, at 78, was laid to rest in Oakland Cemetery in St. Paul.

His groundbreaking work in telediagraph technology paved the way for significant progress in electrically transmitted visual communication.

Ernest Hummel works with the telediagraph transmitter while two others work with the receiver.